Artificial Neural Network vs. Support Vector Machine For Speech Emotion Recognition

Main Article Content

Abstract

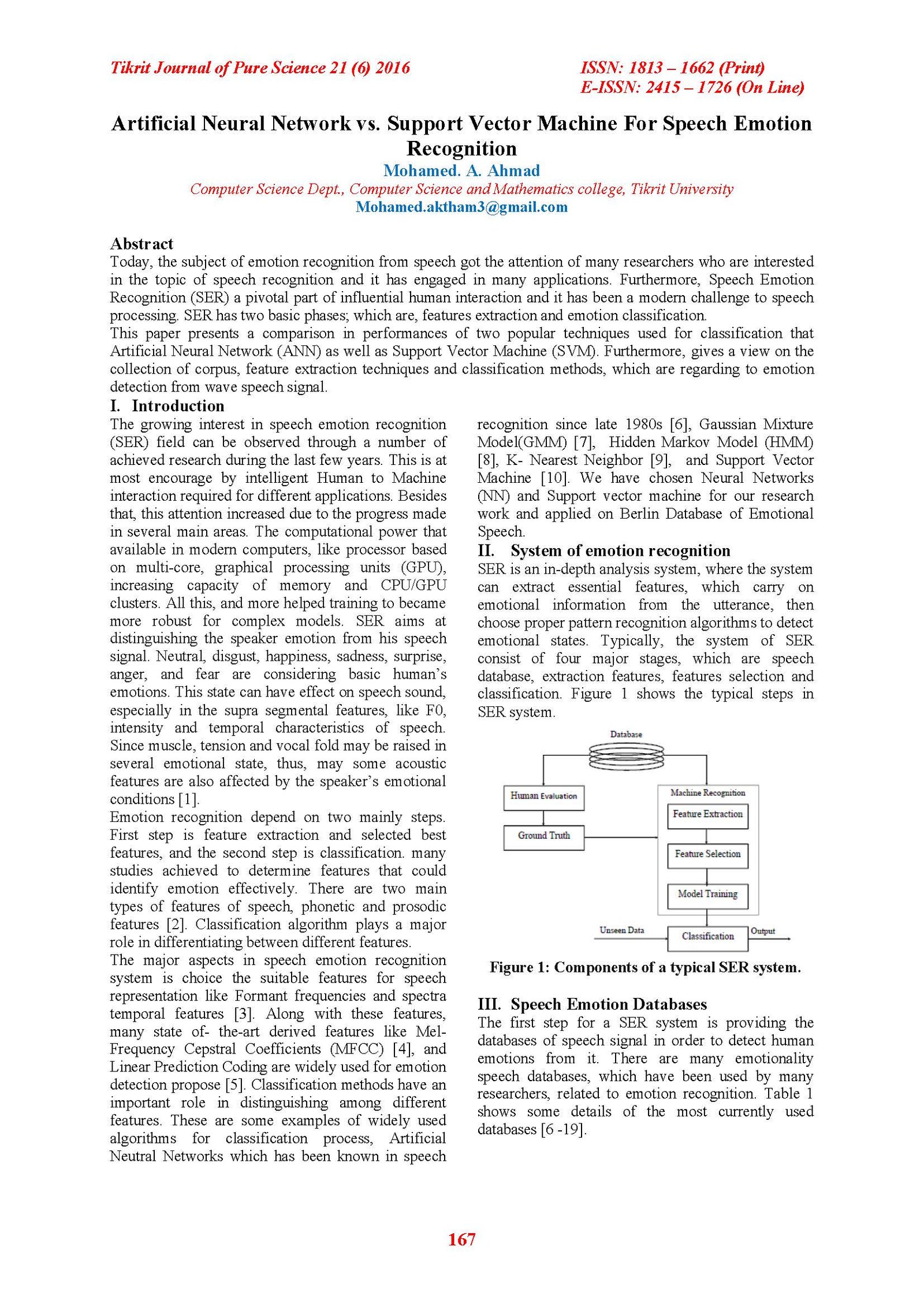

Today, the subject of emotion recognition from speech got the attention of many researchers who are interested in the topic of speech recognition and it has engaged in many applications. Furthermore, Speech Emotion Recognition (SER) a pivotal part of influential human interaction and it has been a modern challenge to speech processing. SER has two basic phases; which are, features extraction and emotion classification.

This paper presents a comparison in performances of two popular techniques used for classification that Artificial Neural Network (ANN) as well as Support Vector Machine (SVM). Furthermore, gives a view on the collection of corpus, feature extraction techniques and classification methods, which are regarding to emotion detection from wave speech signal.

Article Details

This work is licensed under a Creative Commons Attribution 4.0 International License.

Tikrit Journal of Pure Science is licensed under the Creative Commons Attribution 4.0 International License, which allows users to copy, create extracts, abstracts, and new works from the article, alter and revise the article, and make commercial use of the article (including reuse and/or resale of the article by commercial entities), provided the user gives appropriate credit (with a link to the formal publication through the relevant DOI), provides a link to the license, indicates if changes were made, and the licensor is not represented as endorsing the use made of the work. The authors hold the copyright for their published work on the Tikrit J. Pure Sci. website, while Tikrit J. Pure Sci. is responsible for appreciate citation of their work, which is released under CC-BY-4.0, enabling the unrestricted use, distribution, and reproduction of an article in any medium, provided that the original work is properly cited.

References

[1] Katagiri, S., “Handbook of Neural Network for Speech Processing," Artech House Signal Processing Library, October 2000.

[2] Yashaswi, M., Nachamai M. and Joy P., “A Comprehensive Survey on Features and Methods for Speech Emotion Detection,” In Proceedings of International Conference on Electrical, Computer and Communication Technologies (ICECCT) , IEEE, pp. 1-6, 2015.

[3] S. Wu, H. Tiago “Automatic Recognition Of Speech Emotion Using Long-Term Spectro-Temporal Features,” In Proceedings of 16th International Conference on Digital Signal Processing, IEEE, pp. 1-6, 2009.

[4] A. B. Kandali, S. Member, A. Routray, and T. K. Basu, “Emotion recognition from Assamese speeches using MFCC features and GMM classifier,” In Proceedings of TENCON 2008 - 2008 IEEE Region 10 Conference, IEEE, pp. 1-8, 2008. [5] Ververidis, D., and Kotropoulos, C., “Fast sequential floating forward selection applied to emotional speech features estimated on DES and SUSAS data collections,” In Proceedings of the International Conference on Signal Processing Conference, 4th European, IEEE, 8 Sept. 2006.

[6] Sweeta B., Amita D. “Emotional Hindi Speech: Feature Extraction and Classification,” In Proceedings of International Conference on Computing for Sustainable Global Development (INDIACom), IEEE, pp. 1865 - 1868, 2015.

[7] A. B. Kandali, A. Routray, and T. K. Basu, “Vocal emotion recognition in five languages of Assam using features based on MFCCs and Eigen Values of Autocorrelation Matrix in presence of babble noise,” Commun. (NCC), 2010 Natl. Conf., 2010.

[8] B. Schuller, G. Rigoll, and M. Lang, “Hidden Markov model-based speech emotion recognition,” Int. Conf. Multimed. Expo. ICME ’03. Proc. (Cat. No.03TH8698), vol. 1, pp. 1–4, 2003

[9] Y. Pan, P. Shen, and L. Shen, “Speech Emotion Recognition Using Support Vector Machine,” In Proceedings of Electronic and Mechanical Engineering and Information Technology (EMEIT), International Conference on (Volume:2 ) 621 - 625, 2012.

[10] M. Dumas, “Emotional Expression Recognition using Support Vector Machines.” In Proceedings of International Conference on Computing , IEEE, pp. 1865 - 1868, 2015.

[11] Yi-Lin, L. and Gang, W., “Speech emotion recognition based on HMM and SVM, ” In Proceedings of the International Conference on Machine Learning and Cybernetics Vol.:8, IEEE, 21 Aug. 2005.

[12] Hansen, J. and Bou-Ghazale, S., “Getting Started with SUSAS: A Speech Under Simulated and Actual Stress Database,” Proc. EUROSPEECH-97, Rhodes, Greece, Vol. 4, pp. 1743-1746, 1997.

[13] Hozjan, V., Kacic, Z., and Moreno, A., “Interface databases: Design and collection of a multilingual emotional speech database,” In Proceedings of 3rd International Conference on Language Resources and Evaluation, pp. 2024–2028, Canary Islands, Spain 2002.

[14] Breazeal, C. and Aryananda, L. “Recognition of affective communicative intent in robot-directed speech,” Autonomous Robots Vol. 12, Issue 1, pp. 83-104, 2002.

[15] Malcolm, S., and Gerald, M., “BabyEars: A recognition system for affective vocalizations,” Speech Communication Vol. 39, Issues 3–4, pp. 367–384, science direct, February 2003.

[16] Burkhardt, F., Paeschke A., and others, “A Database of German Emotional Speech,” In Proceedings of the Ninth European Conference on InterSpeech, pp.1517–1520, Citeseerx, 2005.

[17] A. Batliner, S. Steidl, E. Noth, “Releasing a thoroughly annotated and processed spontaneous emotional database: the FAU Aibo Emotion Corpus,” In Proceedings of the Corpora for Research on Emotion and Affect Workshop, 2008.

[18] Vinay, G. and Mehra, A., “Gender specific emotion recognition through speech signals,” In Proceedings of the International Conference on Signal Processing and Integrated Networks (SPIN), IEEE, pp. 727 – 733, 20-21 Feb. 2014.

[19] McKeown, G., Valstar, M. , Cowie, R. and more authors, “The SEMAINE Database: Annotated Multimodal Records of Emotionally Colored Conversations between a Person and a Limited Agent,” Affective Computing, IEEE Transactions on Vol. 3, Issue 1, IEEE, pp. 5 – 17, 09 April 2012.

[20] ElAyadi, M., Kamel, M. and Karray F., “Survey on speech emotion recognition: Features, classification schemes, and databases,” Pattern Recognition, Vol. 44, Issue 344, pp.572–587, 2011.

[21] Rong, J., Li, G., and Phoebe, P., “Acoustic feature selection for automatic emotion recognition from speech,” Journal of Information Processing and Management, Vol. 45, pp.315–328, 2009.

[22] Clavel, C., Vasilescu, I., and others, “Fear-type emotion recognition for future audiobased surveillance systems,” Journal of Speech Communic -ation, Vol. 50, Issue 6, pp. 487–503, 2008.

[23] Polzehl, T., Schmitt, A., Metze, F. and M. Wagner, “Anger recognition in speech using acoustic and linguistic cues,” Journal of Speech Communication, Vol. 53, pp. 1198–1209, 2011.

[24] Altun, H., Polat, G., “Boosting selection of speech related features to improve performance of multi-class SVMs in emotion detection,” Journal of Expert Systems with Applications, Vol. 36, pp.8197–8203, 2009.

[25] Bozkurt, E., Erzin, E., Erdem, C., and Erdem, A., “Formant position based weighted spectral features for emotion recognition,” Journal of Speech Communication, Vol. 53, pp. 1186–1197, 2011.

[26] Perez-Espinosa, H., Reyes-Garcia, C. and Villasenor-Pineda, L., “Acoustic feature selection and classification of emotions in speech using a 3D continuous emotion model,” Journal of Biomedical Signal Processing and Control, 2011.

[27] S. Wu, T.H. Falk, W.Y. Chan, “Automatic speech emotion recognition using modulation spectral features,” Journal of Speech communication, Vol. 53, pp.768–785, 2011.

[28] Guyon, I., Elisseeff A., “An Introduction to Variable and Feature Selection,” Journal of Machine Learning Research, Vol. 3, pp. 1157-1182, 2003.

[29] Xiao, Z., Dellandrea, E., Dou, W., and Chen, L., “Features extraction and selection for emotional speech classification,” In Proceedings of International conference on advanced video and signal based surveillance (AVSS), IEEE, pp. 411–416, 2005.

[30] Yashpalsing, C., Dhore, M., and Pallavi Y., “Speech Emotion Recognition Using Support Vector Machine,” International Journal of Computer Applications, Vol. 1, 2010.

[31] A. Jain , D. Zongker, “Feature selection: evaluation, application, and small sample performance,” In Proceedings of International Conference on Transactions on Pattern Analysis and Machine Intelligence (Volume:19, Issue: 2), IEEE, pp. 153-158, 2002.

[32] Yixiong, P., Peipei, S. and Liping, S., “Speech Emotion Recognition Using Support Vector Machine,” International Journal of Smart Home, Vol. 6, No. 2, April 2012. [33] Leif E., “K-nearest neighbor,” http://scholarpedia.org/article/K-nearest_neighbor, 2009. [34] Muzaffar, K., Tirupati, G., Mohmmed, N. and Ruhina Q., “Comparison between k-nn and svm method for speech emotion recognition,” International Journal on Computer Science and Engineering (IJCSE), Vol. 3, No. 2, Feb 2011.

[35] Yun, S. and Yoo, C., “Loss-scaled large-margin Gaussian mixture models for speech emotion classification,” Audio Speech and Language Processing, Transactions on, Vol. 20, no. 2, IEEE, pp. 585–598, 2012. [36] Schuller, B. and Rigoll, G., “Hidden Markov model-based speech emotion recognition,” In Proceedings of the Acoustics, Speech, and Signal Processing International Conference Vol. 2, IEEE, pp. 1-4, 6-10 April 2003. [37] J. Nicholson, K. Takahashi and R. Nakatsu, “Emotion Recognition in Speech Using Neural Networks,” Neural Computing and Applications Vol. 9, Issue 4, pp 290-296, Springer, 2000.

[38] M. Bhatti, W. Yongjin, and G. Ling, “A neural network approach for human emotion recognition in speech,” In Proceedings of the International Symposium on Circuits and Systems ISCAS’04, Vol. 2, pp. II–181–4, 2004.

[39] F. Eyben, M. Wollmer, and B. Schuller, “Open EAR - Introducing the Munich open-source emotion and affect recognition toolkit,” In Proceedings of 3rd International Conference on Affective Computing and Intelligent Interaction, ACII’09, IEEE, pp. 1–6, 2009.